I want my first Big Data Cluster TODAY!!!

So, you’ve heard about Big Data Clusters in SQL Server 2019 and want to get some hands-on experience? Let’s get you started today! This article (formerly published on the PASS Community Blog) will guide you step by step through deploying a Big Data Cluster in Azure Kubernetes Service (AKS) from a Windows client. The idea is to get your Big Data Cluster up and running fast, so don’t expect a deep dive on every component along the way.

Is there anything I should know before getting started?

As we will focus on how to deploy a Big Data Cluster (BDC), let’s assume that you have a rough idea on what a BDC is.

BDC runs on a technology called Kubernetes – AKS is just one way of using Kubernetes as your base layer to deploy a BDC. While you don’t need to be an expert in Kubernetes, I highly recommend reading The Illustrated Children’s Guide to Kubernetes or – if you prefer a deeper dive – watching this video by MVP Anthony Nocentino: Inside Kubernetes – An Architectural Deep Dive.

We will mainly interact with our BDC through a tool called Azure Data Studio (ADS), one of the client tools provided by Microsoft to work with SQL Server. If you are not familiar with it, take a look at the official docs.

Finally, as I am lazy, we will mainly deploy through the command line including the installation of our prerequisites. This will happen through a package manager called chocolatey (https://chocolatey.org/).

What will I need for this exercise?

As we will deploy this cluster in Azure Kubernetes Service, you will need an Azure subscription. This can be a free trial as we will not be using a ton of resources (unless you plan to keep this cluster up and running for a while).

As you will need that Azure Subscription anyway, I would also recommend deploying a client VM running Windows 10 or Windows Server just to make sure the prerequisites and tools don’t interfere with anything else you are already running. Deployment would also work from a Mac or Linux machine but would require a slightly different approach – feel free to ping me if you have a need for this.

I chose to deploy a Windows 10 VM using a Standard B2s machine – even if you keep this running for a few days, it will only cost you a few cents per hour. Still, if you decide to just deploy from your existing laptop, you should probably be fine.

Can we finally get started?

YES! Let’s start by installing chocolatey followed by the BDC prerequisites.

Connect to the Client you’ll be using to run your deployment and open a new PowerShell window. Run this command to install chocolatey (choco):

Set-ExecutionPolicy Bypass -Scope Process -Force;

[System.Net.ServicePointManager]::SecurityProtocol = [System.Net.ServicePointManager]::SecurityProtocol -bor 3072;

iex ((New-Object System.Net.WebClient).DownloadString('https://chocolatey.org/install.ps1'))

Next, we’ll install those prerequisites (azure-cli, kubernetes-cli, curl, python, Azure Data Studio and azdata). You can do this from the same PowerShell window:

choco install azure-cli -y

choco install kubernetes-cli -y

choco install curl -y

choco install python3 -y

choco install azure-data-studio -y

pip3 install -r https://aka.ms/azdata
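If you want to double-check that everything landed on your PATH before moving on, a small Python sketch like the one below can help. The list of executable names is my assumption about what the packages above install (Azure Data Studio is left out as it is a GUI application), so adjust it if your setup differs. You may need to open a new shell first so that PATH changes are picked up.

```python
import shutil

# Executable names assumed for the prerequisites installed above.
REQUIRED_TOOLS = ["az", "kubectl", "curl", "python", "pip3", "azdata"]


def find_missing_tools(tools=REQUIRED_TOOLS):
    """Return the entries of `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = find_missing_tools()
    if missing:
        print("Not found on PATH:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```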

The last preparatory step will be a small python script from GitHub which will basically take care of the actual deployment. Close the PowerShell window and open a command prompt. Run the following curl command to download the script:

curl https://raw.githubusercontent.com/microsoft/sql-server-samples/master/samples/features/sql-big-data-cluster/deployment/aks/deploy-sql-big-data-aks.py --output deploy-sql-big-data-aks.py
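Should curl give you trouble, the same download can be done with Python's standard library. This is only a sketch of an alternative, not part of the original walkthrough; the helper names `filename_from_url` and `download` are made up for illustration.

```python
import urllib.request
from pathlib import PurePosixPath
from urllib.parse import urlparse

# Same URL as in the curl command above.
SCRIPT_URL = (
    "https://raw.githubusercontent.com/microsoft/sql-server-samples/master/"
    "samples/features/sql-big-data-cluster/deployment/aks/deploy-sql-big-data-aks.py"
)


def filename_from_url(url):
    """Return the last path segment of a URL, used as the local file name."""
    return PurePosixPath(urlparse(url).path).name


def download(url=SCRIPT_URL):
    """Download `url` into the current directory and return the file name."""
    target = filename_from_url(url)
    urllib.request.urlretrieve(url, target)
    return target
```

Calling `download()` from a Python prompt should leave deploy-sql-big-data-aks.py in your current directory, just like the curl command.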

We’re almost ready to go. To be able to deploy the BDC to Azure, we need to log in to our Azure subscription using:

az login

This will open a web browser window where you will authenticate using the credentials associated with your Azure subscription. After you’ve logged in, you can close the web browser. You will see a list of all your subscriptions (if you have multiple) – copy the id of the subscription to be used. Then run the deployment script:

.\deploy-sql-big-data-aks.py

This script will ask you for a couple of parameters, some of which have defaults. For those parameters that have a default value, just stick to those for now.

- Azure subscription ID: use the ID you got from az login

- Name for your resource group (this script will create a new RG for your BDC)

- Azure Region

- Size of the VMs to be used for the cluster

- Number of worker nodes

- Name of the BDC (think of this like a named instance)

- Username for the admin user

- Password for the admin user
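If you lost track of the subscription ID from the az login output, you can list your subscriptions again with az account list. The sketch below parses that JSON output in Python; the function names are made up for illustration, and it assumes you are already logged in.

```python
import json
import subprocess


def list_subscriptions(raw_json):
    """Turn the JSON printed by `az account list` into (name, id, is_default) tuples."""
    return [
        (sub["name"], sub["id"], sub.get("isDefault", False))
        for sub in json.loads(raw_json)
    ]


def fetch_subscriptions():
    """Call the Azure CLI and return the parsed subscription list."""
    # shell=True so that the az.cmd wrapper is resolved on Windows.
    result = subprocess.run(
        "az account list --output json",
        shell=True, capture_output=True, text=True, check=True,
    )
    return list_subscriptions(result.stdout)


if __name__ == "__main__":
    for name, sub_id, is_default in fetch_subscriptions():
        marker = "*" if is_default else " "
        print(f"{marker} {sub_id}  {name}")
```

The subscription marked with * is the one the Azure CLI currently treats as default.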

After the last step, the script will:

- Create a Resource Group

- Create a Service Principal

- Create an AKS Cluster

- Deploy a BDC to this AKS Cluster
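To give you a rough idea of what these steps boil down to, here is a simplified dry-run sketch that only builds and prints the kind of az and azdata commands involved. It is not the actual script: the function name and the sample values are made up, the Service Principal step is left out, the BDC name is not applied, and I am assuming the aks-dev-test configuration profile. azdata would also expect the admin credentials in the AZDATA_USERNAME and AZDATA_PASSWORD environment variables.

```python
def build_deployment_commands(subscription_id, resource_group, region,
                              vm_size, node_count, cluster_name):
    """Return the CLI commands (as argument lists) for a simplified deployment."""
    return [
        # Select the subscription to deploy into.
        ["az", "account", "set", "--subscription", subscription_id],
        # Create the resource group that will hold the AKS cluster.
        ["az", "group", "create", "--name", resource_group, "--location", region],
        # Create the AKS cluster itself.
        ["az", "aks", "create", "--name", cluster_name,
         "--resource-group", resource_group,
         "--node-vm-size", vm_size, "--node-count", str(node_count),
         "--generate-ssh-keys"],
        # Fetch the kubectl credentials for the new cluster.
        ["az", "aks", "get-credentials", "--name", cluster_name,
         "--resource-group", resource_group, "--overwrite-existing"],
        # Deploy the BDC on top of it (profile name is an assumption).
        ["azdata", "bdc", "create", "--config-profile", "aks-dev-test",
         "--accept-eula", "yes"],
    ]


if __name__ == "__main__":
    # All values below are placeholders for illustration only.
    for cmd in build_deployment_commands(
            "00000000-0000-0000-0000-000000000000", "bdc-rg", "westeurope",
            "Standard_D8s_v3", 2, "bdc-aks"):
        print(" ".join(cmd))
```

Nothing is executed here, so you can safely run it to see the sequence before letting the real script do the work.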

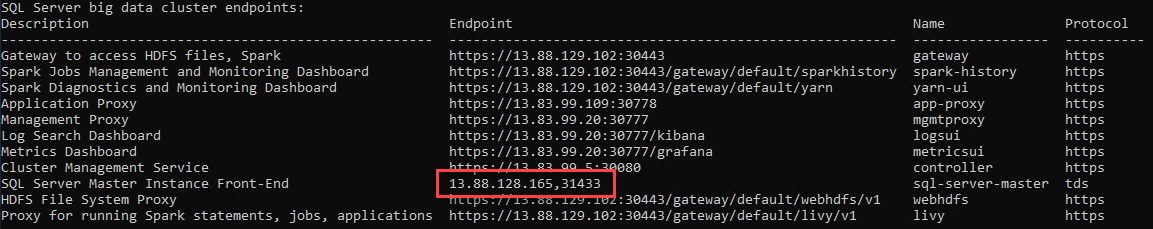

The script will take around 30 minutes and will finish by printing the endpoints of your BDC. This output should look similar to this:

Look for the SQL Server Master Instance Front-End (using port 31433) – you will need it later.

WAIT?! What just happened?

By just running a few commands in a shell, we deployed a full BDC in Azure. All this was made possible using a package manager (choco) and Kubernetes: This combination allows us to deploy a complex system in a very simple way.

You can connect to it, just like every other SQL Server, using Azure Data Studio and the SQL Master endpoint from above.

You are now connected to your BDC and can start working with it.
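If the connection does not work right away, a quick check whether the endpoint is reachable at all can save you some time. The sketch below splits a SQL Server style host,port endpoint and tries to open a TCP connection to it. The helper names and the sample address are made up, and an open port only tells you that something is listening, not that your login will work.

```python
import socket


def parse_endpoint(endpoint):
    """Split a 'host,port' endpoint into (host, port); the port defaults to 1433."""
    host, _, port = endpoint.partition(",")
    return host.strip(), int(port) if port else 1433


def is_reachable(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # Placeholder address; use the SQL Master endpoint printed by the script.
    host, port = parse_endpoint("203.0.113.10,31433")
    print(f"{host}:{port} reachable:", is_reachable(host, port))
```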

ADS would also be one way to effectively manage and troubleshoot your BDC.

Instead of using the python script above, we could also have deployed the BDC directly through ADS. Also, the script was calling a tool called azdata which uses two configuration files that define the sizing and configuration of your BDC. We left all those at their default values for now, leaving a deep dive into them for another day.

What if I have additional questions?

This post is part of a mini-series. In the following posts, we will look at how you can leverage the capabilities of your BDC through Data Virtualization or by using flat files stored in the storage pool.

In the meantime, if you have questions, please feel free to reach out to me on Twitter: @bweissman – my DMs are open.